Application DNA // Work your way out from the back

Persistence Modeling and Framework Considerations

Summary

Who should read this?

This article is for you if you are one or more of the following

A Software Architect creating a set of patterns for her team

An Engineer evaluating technical architecture for a new project

A Product owner trying to learn more about what your engineers are up to

A Startup CTO guiding decisions from all of the above viewpoints

What will you learn

We will briefly touch on how we solve for Database Persistence Modeling in Application DNA. We will summarize the libraries used and provide an overview of how to engage them.

Cloud Agnostic Advice

We are opinionated on some of these decisions but we are aiming to provide advice that will work regardless of your cloud provider. We do have a database preference of PostgreSQL however you could probably substitute any SQL database for your persistence layer. We do believe that SQL is the best scalable persistence layer over other NoSQL providers.

Modeling, Frameworks, and Database choices

Why do we do this?

We leverage an ORM tool (Object Relational Mapping) to help us map a normalized database model to an in-memory model in our programming language of choice. Nearly all major full-stack approaches leverage some form of ORM. Java has Hibernate/iBatis, Scala uses Slick, Ruby has Rails, and Python has DJango or SQLAlchemy+Alembic.

Application DNA Users SQLAlchemy for DB Modeling + ORM and Alembic to manage migrating (upgrading and downgrading) database versions.

Why, you may ask, do we care about versioning our database? We do this because we want to know that we can work together on large teams and integrate changes to a production database environment while preserving all of the integrity of the data that is there. We also want to ensure that we can work well with our team members and integrate their changes into ours without stepping on their work.

SQL Alchemy - the place I prefer to start

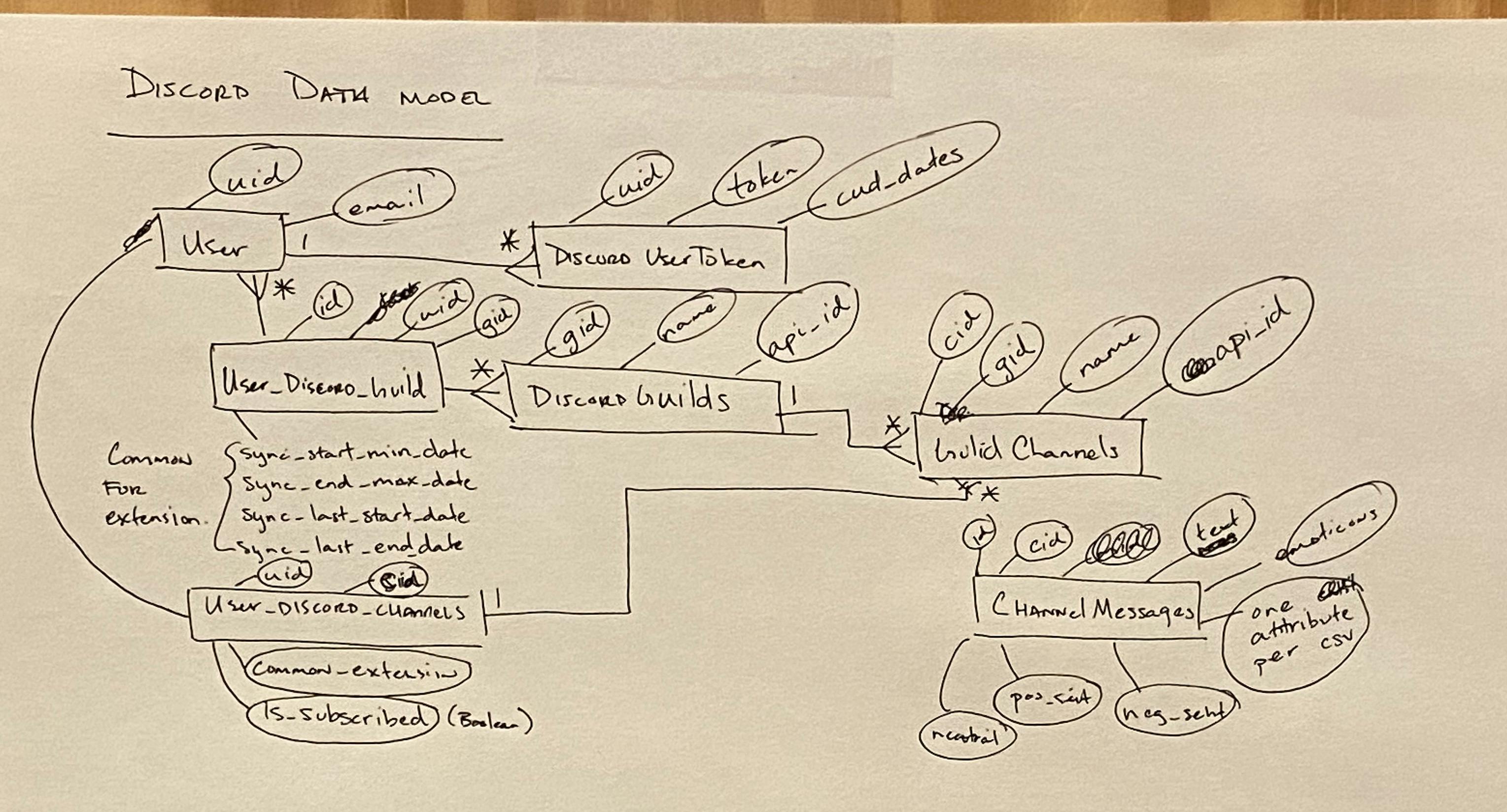

Pen and Paper Entity Relationship Modeling

When I am adding a new feature to an application I am working on, I will typically think through the model. An example of how I may think through a model would look like this using Pen and Paper.

I tend to just use simple Entity Relationship models (ER) with some very simple boxes to define entities (these will become Python classes) and Attributes (circles) which will become the attributes I place on my classes. I try to also think about the relationships between models for example Users above have 1 to Many (1 to *) relationship with Discord User Tokens, because a user in my system may sign into Discord multiple times. You are in control, you get to model the world as you see it. This let's you ignore the complexity of implementation and this is the first step. You can easily do this on a whiteboard with a team as well to be more accurate with your modeling exercise.

Implement the classes in Python with SQLAlchemy

I tend to start with something like the package I will work in for my application, and with respect to application DNA it is dna.common.model.db.discord.model.py

I wanted all of my classes to have some common attributes since I am data warehousing so there is a common base class:

class HasSyncTimes(HasCreateUpdateDeleteTime):

# The earliest available date for the data source

sync_start_min_datetime = Column(DateTime(timezone=True), default=None, nullable=True, index=True)

# The latest available date for the data source (hopefully always up to now)

sync_end_max_datetime = Column(DateTime(timezone=True), default=None, nullable=True, index=True)

# Earliest and Latest datetimes that this record has been synced from its source

sync_last_start_datetime = Column(DateTime(timezone=True), default=None, nullable=True, index=True)

sync_last_end_datetime = Column(DateTime(timezone=True), default=None, nullable=True, index=True)

All of my stochastic data (time-series) will have this base class because they are synced from outside downstream sources and in theory I could delete or update them.

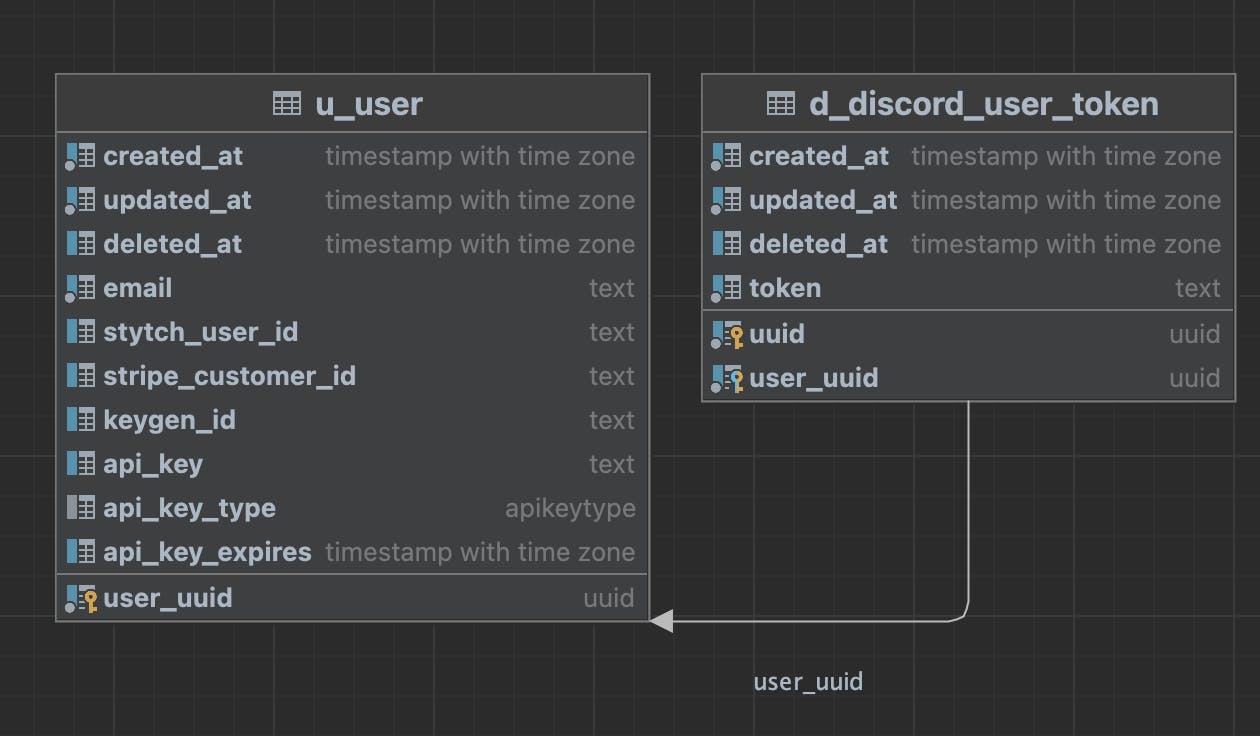

Here is a simplified version of the User to DiscordUserToken relationship.

class User(Base, HasCreateUpdateDeleteTime):

__tablename__ = "u_user"

user_uuid = Column(UUID(as_uuid=True), default=uuid.uuid4(), primary_key=True)

email = Column(Text, nullable=False, unique=True, index=True)

class DiscordUserToken(Base, HasCreateUpdateDeleteTime):

__tablename__ = "d_discord_user_token"

uuid = Column(UUID(as_uuid=True), default=uuid.uuid4(), primary_key=True)

token = Column(Text, nullable=False, unique=True, index=True)

user_uuid = Column(UUID(as_uuid=True), ForeignKey(User.user_uuid), unique=True, nullable=False)

Once you've completed this step for all of the classes in your diagram you can move on to generate the datastructure and see if it matches your expectations.

Alembic Migrations

To generate your migrations you will use a tool called Alembic. This is a database migration tool that will generate upgrade and downgrade scripts for you.

alembic revision -m "Defininig my first migration" --autogenerate

This will spit out code in your alembic directory if you have everything setup properly like this:

"""Defininig my first migration

Revision ID: 3b05ef4fd8f8

Revises: 7a9de732e431

Create Date: 2022-09-06 15:47:55.788312

"""

from alembic import op

import sqlalchemy as sa

from sqlalchemy.dialects import postgresql

# revision identifiers, used by Alembic.

revision = '3b05ef4fd8f8'

down_revision = '7a9de732e431'

branch_labels = None

depends_on = None

def upgrade() -> None:

op.create_table('u_user',

sa.Column('created_at', sa.DateTime(timezone=True), nullable=False),

sa.Column('updated_at', sa.DateTime(timezone=True), nullable=False),

sa.Column('deleted_at', sa.DateTime(timezone=True), nullable=True),

sa.Column('user_uuid', postgresql.UUID(as_uuid=True), nullable=False),

sa.Column('email', sa.Text(), nullable=False),

sa.PrimaryKeyConstraint('user_uuid', name=op.f('pk_u_user'))

)

op.create_index(op.f('ix_u_user_created_at'), 'u_user', ['created_at'], unique=False)

op.create_index(op.f('ix_u_user_deleted_at'), 'u_user', ['deleted_at'], unique=False)

op.create_index(op.f('ix_u_user_email'), 'u_user', ['email'], unique=True)

op.create_index(op.f('ix_u_user_updated_at'), 'u_user', ['updated_at'], unique=False)

op.create_table('d_discord_user_token',

sa.Column('created_at', sa.DateTime(timezone=True), nullable=False),

sa.Column('updated_at', sa.DateTime(timezone=True), nullable=False),

sa.Column('deleted_at', sa.DateTime(timezone=True), nullable=True),

sa.Column('uuid', postgresql.UUID(as_uuid=True), nullable=False),

sa.Column('token', sa.Text(), nullable=False),

sa.Column('user_uuid', postgresql.UUID(as_uuid=True), nullable=False),

sa.ForeignKeyConstraint(['user_uuid'], ['u_user.user_uuid'], name=op.f('fk_d_discord_user_token_user_uuid_u_user')),

sa.PrimaryKeyConstraint('uuid', 'user_uuid', name=op.f('pk_d_discord_user_token'))

)

op.create_index(op.f('ix_d_discord_user_token_created_at'), 'd_discord_user_token', ['created_at'], unique=False)

op.create_index(op.f('ix_d_discord_user_token_deleted_at'), 'd_discord_user_token', ['deleted_at'], unique=False)

op.create_index(op.f('ix_d_discord_user_token_token'), 'd_discord_user_token', ['token'], unique=True)

op.create_index(op.f('ix_d_discord_user_token_updated_at'), 'd_discord_user_token', ['updated_at'], unique=False)

# ### end Alembic commands ###

def downgrade() -> None:

# Truncated but the opposite of above

These files can get quite long and for this purpose of demonstration I've removed most of the output to give you the idea of what you'd get as you work through this step.

After you have the files generated you can just run them like so:

alembic upgrade head

If you did things properly you should be able to visualize your database using a db visualizer to see the relationships you just created.

That's it, repeat this process for every feature you are thinking through and you have yourself a sharable and reproducible pattern you can leverage with your scalable team. We call our application DNA because we believe this persistence layer is the DNA of our system. As your team scales maybe you'll want to have different DNA for each team, project, and vertical. For startup teams, we find it most useful to start with a monolith and push it until it breaks.